10 Steps to your very own Corporate Artificial Intelligence project

- Adrien Book

- Aug 14, 2019

- 10 min read

Updated: May 27, 2023

The future of business goes through Artificial Intelligence. That, we know. However, creating a smart algorithm is not a given for many entrepreneurs and small businesses which lack the resources to launch a successful Artificial Intelligence program. Much like the rest of the world, Artificial Intelligence (A.I) has a 1% problem.

While (very) large corporations benefit from the vast amount of data available to them, as well as a combination of business, technological and regulatory expertise, most SMEs have no such luck.

This should however not discourage the brave and bold who seek to embark on a data-science journey. And though there are no shortcuts, there are clear steps to undertake in order to start an A.I project, which I’ve laid out below in the hope that it’ll clear up some of the mysticism surrounding the creation of an “intelligent” algorithm.

Please note that this is not meant to be a technical guide, and that definitions will be kept to a minimum for brevity's sake.

1. Formulate an Executive Strategy

Before even thinking about A.I, some key questions must be answered by a variety of key company executives : do they want to disrupt their market by creating a different type of value proposition? Do they seek to be “best in class”? Maybe their aim is to stay level in a competitive market? Or even catch up to the current leader?

These questions have to be answered BEFORE any sort of A.I project is set in motion. Operational teams will otherwise be left to aimlessly dig through data, looking for a story to tell. Given the nature of most industries, though, they would be chasing a moving target, rewriting history as the data comes in. Yes, data is fun. Yes, it’s interesting. But it serves no purpose on its own. Starting with it instead of clear goals creates only solutions in search of a problem.

Hint : A good strategy starts with disgruntled customers in mind, not technologies.

2. Identify and Prioritise Ideas

The decisions, of course, do not stop at the formulation of a strategy; strategy only answers the question “Who am I?”. “What am I doing?” is an altogether different question, and needs to be answered more operationally through the identification of use cases.

Let’s say a company’s long-term strategy is to be the most “trusted” player in its industry. It could enact this strategy by ensuring that all customer queries are answered without errors, that they are answered quickly, that all callers are greeted by a human instead of a robot… hundreds of such ideas could be (should be) systematically identified, evaluated, clustered, prioritised, and discussed in workshops or through small consulting engagements (woo!).

Some will go straight to Sales, Marketing, Accounting, HR… to potentially be dealt with using a traditional operational/statistical approach; and some will be deemed fit for a solution involving A.I, in one of its many forms. I advise looking at three specific issues to start with :

Bottlenecks : capabilities exist within the company, but are not optimally distributed.

Scaling : capabilities exist within the company, but the process for using them takes too long, is too expensive, or cannot be scaled.

Resources : the company collects more data than can be currently analysed and applied by existing resources (roughly 55% of data collected by companies goes unused).

Hint : It is more productive for companies to look at A.I through the lens of business capabilities rather than technologies.

3. Ask the right questions about Data

The data question emerges immediately after a Machine Learning project (the term “Machine Learning” is much more accurate than “A.I” at this point of the conversation) is deemed operationally sound and technologically feasible. Or, should I say, the data questionS…

What type of data is needed ?

There technically aren’t a lot of different types of data : it can be numerical (historically the easiest to use due to its tabular nature), text, images, videos, sound... Just about anything that can be recorded could be deemed to be data. Ultimately, computers are agnostic about the type of input you give them; it’s all just numbers to them.

How much data is needed ?

There is no specific number of data points that can be prescribed, as it varies wildly from project to project; but a start-up which has just launched and has no more than 300 clients probably does not naturally have the resources to launch an ML project. Furthermore, note that data can be made up of either a lot of data points (“Big N”), a lot of details for each data point (“Big D”), or both. Both is best.
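To make “Big N” and “Big D” concrete, here is a minimal sketch in Python. The `clients` list and the `describe_shape` helper are made up for illustration: N is simply the number of rows, and D the number of distinct details recorded across them.

```python
# Hypothetical toy dataset: each row is one client, each key one recorded detail.
clients = [
    {"age": 34, "city": "Lyon", "orders": 3},
    {"age": 51, "city": "Paris", "orders": 7},
    {"age": 27, "city": "Lille", "orders": 1},
]


def describe_shape(rows):
    """Return (N, D): number of data points and number of distinct details."""
    n = len(rows)
    d = len({key for row in rows for key in row})
    return n, d


n, d = describe_shape(clients)
print(f"N = {n} data points, D = {d} details per data point")
```

Three clients and three details each is neither Big N nor Big D, which is roughly the situation of the freshly launched start-up mentioned above.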

Do we have that data?

Data is either “available” or “to collect” (yes, I’m over-simplifying, sue me). Collection can either be done internally, which can be incredibly time-consuming (we’re talking months and major restructuring), or rely on external sources (predicting umbrella demand, for example, would use weather data freely available to all).

Hint : Unique data, rather than cutting-edge modeling, is what creates a valuable A.I solution.

4. Perform the necessary Risk Assessments

Any self-respecting project requires continuous risk-assessment in order to address issues before they arise (PMO 101). This should come early in the project, and be done thoroughly and continuously throughout its entire life-time. Below are 10 questions to ask to get started:

Do I have a SMART goal ? : “Everyone else is doing it” is a terrible reason to get into the A.I game.

Do I have enough data ? : It’s simply not possible for an algorithm to understand the present and the future without being keenly aware of the past.

Are there errors in my dataset ? : Garbage in, garbage out.

Is my dataset a d*ck ? : Data must be representative of reality, and avoid reflecting reality’s existing prejudices.

Do I have the people to make this happen ? : By one widely cited estimate, there are only around 22,000 PhD-level experts worldwide capable of developing cutting edge algorithms.

Will I need to change my hierarchical structure ? : If all the project’s employees answer to different managers within different company branches, it is likely that different goals will emerge.

Will my employees become Luddites ? : “Will it replace jobs ?” and “Will I have to undergo training ?” are valid questions which need answers.

Do I have the right architecture ? : Algorithms reside within ecosystems which rely on data collection, workflow management, IaaS… themselves part of a wider ecosystem made of APIs, Data storage, Cybersecurity…

Are there any regulatory hurdles ? : Checking not only current regulations, but being aware of the ones being discussed has always been key in the corporate world, and shall remain so.

Do I have time ? : If a company is in a time-sensitive crunch, A.I is probably not the answer.
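Two of the questions above (“Are there errors in my dataset ?” and the one about representativeness) lend themselves to quick automated checks. The sketch below is only a starting point under stated assumptions: the `records` list, the field names and both helper functions are hypothetical, and what counts as an acceptable missing rate or group share is a business call, not something the code can decide.

```python
from collections import Counter

# Hypothetical records; None marks a missing value.
records = [
    {"age": 34, "segment": "retail"},
    {"age": None, "segment": "retail"},
    {"age": 29, "segment": "retail"},
    {"age": 45, "segment": "corporate"},
]


def missing_rate(rows, field):
    """Share of rows where `field` is absent or None."""
    if not rows:
        return 0.0
    missing = sum(1 for row in rows if row.get(field) is None)
    return missing / len(rows)


def group_shares(rows, field):
    """Share of each value of `field`, to compare against the real population."""
    counts = Counter(row.get(field) for row in rows)
    total = sum(counts.values())
    return {value: count / total for value, count in counts.items()}


print(missing_rate(records, "age"))
print(group_shares(records, "segment"))
```

If the real customer base is, say, half corporate, a dataset that is three-quarters retail is a warning sign worth raising before any model is trained.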

Hint : Risk assessments are best performed by outside players.

5. Choose the relevant Method & Model

Once all the matters above have been settled (if not, go back to step 1), it’s time to pick the most suitable solution, technologically speaking, to address the previously identified issue.

Doing it this late in the process might seem odd, yet makes perfect sense when one realises that the technologies below are highly adaptive, and that starting with just one in mind would only shrink the horizon of possibilities. In any case, anyone with some experience in the matter will have thought of the right tool to use during the previous steps of the project.

Below is a shallow look at the 7 main categories of machine learning. Concrete examples will be given in a later article, but in the meantime can be found all around the web :

Supervised Machine Learning

Linear algorithms : LAs model predictions based on independent variables. They are mostly used for finding out the relationship between variables and for forecasting, and work well for predictions in a stable environment. → More details : Linear regression, Logistic Regression, SVM, Ridge/Lasso…

Ensemble methods : Ensemble learning is a system that makes predictions based on a number of different models. By combining individual models, the ensemble model tends to be more flexible (less bias) and less data-sensitive (less variance). → More details : Random Forest, Gradient boosting, AdaBoost…

Probabilistic Classification : a probabilistic classifier is able to predict, given an observation of an input, a probability distribution over a set of classes, rather than only outputting the most likely class that the observation should belong to. → More details : Naive Bayes, Bayesian Network, MLE…

Deep Learning : For supervised learning tasks, deep learning methods make time-consuming feature engineering tasks irrelevant by translating the data into compact intermediate representations of the data to increase precision (think categories). It is great for classifying, processing and making predictions based on non-numerical data. → More details : CNN, RNN, MLP, LSTM…
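As an illustration of the simplest supervised category above, here is a rough sketch of a one-variable linear regression (ordinary least squares) in plain Python. The monthly sales figures and the function names are invented for the example; a real project would normally rely on an established library rather than hand-rolled code.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(x, slope, intercept):
    """Apply the fitted line to a new input."""
    return slope * x + intercept


# Hypothetical history: month number -> units sold (the labelled past).
months = [1, 2, 3, 4, 5]
units_sold = [3, 5, 7, 9, 11]

slope, intercept = fit_line(months, units_sold)
print(predict(6, slope, intercept))  # forecast for month 6
```

The “supervised” part is the `units_sold` list: the algorithm learns from past examples for which the right answer is already known.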

Unsupervised Machine Learning

Clustering : “Clustering” is the process of grouping similar data points together. The goal of this unsupervised machine learning technique is to find similarities between data points and automatically group similar ones together. → More details : K-means, DBSCAN, Hierarchical Clustering…

Dimensionality reduction : dimensionality reduction, aka dimension reduction, is the process of reducing the number of random variables under consideration by obtaining a set of principal variables. It also makes very pretty graphs. → More details : PCA, t-SNE…

Deep learning : For unsupervised learning tasks, deep learning methods allow the categorisation of unlabeled data. Any more details would substantially lengthen this article (Soz). → More details : Autoencoders, GANs... (Reinforcement Learning is often listed here too, although it is usually treated as its own paradigm rather than as unsupervised learning.)
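To show what “unsupervised” means in practice, here is a bare-bones K-means sketch in plain Python. Everything in it is an assumption made for illustration: the customer points are invented, and starting from the first `k` points is a deliberately naive initialisation that production libraries replace with smarter schemes.

```python
def kmeans(points, k, iterations=10):
    """Group points into k clusters; returns (centroids, clusters)."""
    centroids = [points[i] for i in range(k)]  # naive init: first k points
    clusters = [[] for _ in range(k)]
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        clusters = [[] for _ in range(k)]
        for point in points:
            distances = [
                sum((a - b) ** 2 for a, b in zip(point, centroid))
                for centroid in centroids
            ]
            clusters[distances.index(min(distances))].append(point)
        # Update step: move each centroid to the mean of its cluster.
        for i, cluster in enumerate(clusters):
            if cluster:
                centroids[i] = tuple(
                    sum(coords) / len(cluster) for coords in zip(*cluster)
                )
    return centroids, clusters


# Hypothetical customers described by (visits per month, average basket).
customers = [(1, 1), (1.5, 2), (1, 0.5), (8, 8), (9, 9), (8.5, 9.5)]

centroids, clusters = kmeans(customers, k=2)
for centroid, cluster in zip(centroids, clusters):
    print(centroid, cluster)
```

Note that nobody told the algorithm which customers belong together: the two groups emerge from the data alone, and it is then up to the business to decide what they mean.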

Hint : all models are wrong, but some are useful.

6. Make a BBP (Build, Buy, Partner) decision

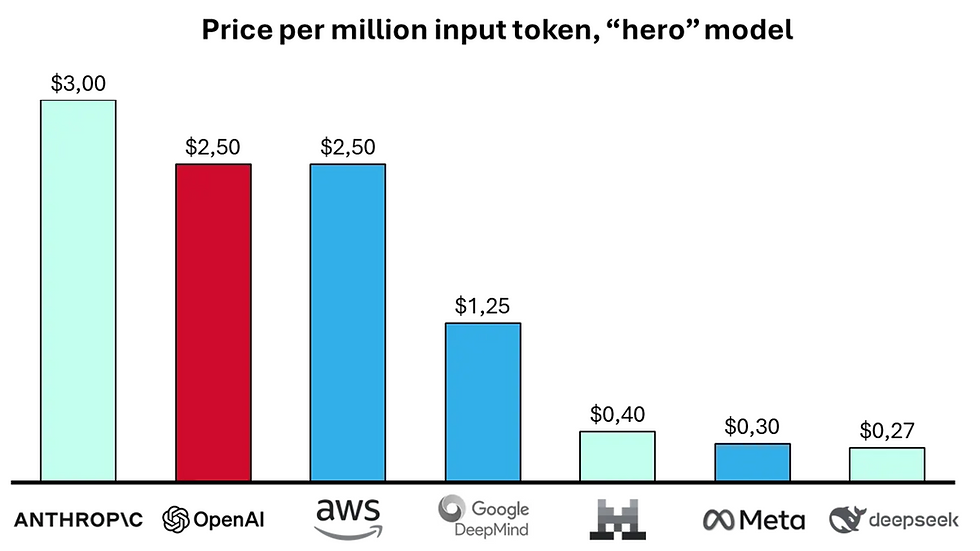

As previously mentioned, few companies can afford to fully develop their own algorithms and deploy them at a large scale unaided. The unlucky 99% have to make complicated choices regarding their ability to invest a large amount with low chances of success, or invest a smaller amount, with a high chance of success but a very sticky relationship with a provider/partner.

There are no easy answers. Just hard choices. Below are a few options :

Build

Further existing analytics capabilities within the company through a variety of technological investments and recruitment.

Further an existing application by enhancing it with cognitive applications.

Use one of the many open source algorithms available and a handful of entrepreneurial employees.

Buy

Use an existing, off-the-shelf, software from a known provider (such as Oracle, Microsoft, AWS…).

Hire an existing provider to fully develop an algorithm (specific developments however mean being completely tied to the provider. No bueno).

Partner

A win-win relationship based on a company’s data and a start-up’s technology. Yet, this type of relationship can be complicated and is rarely sustainable, as the various actors rarely have the same short-term/long-term goals.

Hint : BBP choices depend highly on both the executive strategy and the industry to which a company belongs.

7. Run performance checks

A recently built algorithm cannot be released into the wild untested. Indeed, most machine learning systems operate as “black boxes” (again, a useful over-simplification), and something that may have worked on one set of training data may not work as well (if at all) on another set for a variety of reasons. Thankfully, a handful of tools have been devised to ensure that models work as intended :

Classification Accuracy : number of correct predictions vs number of predictions made

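Logarithmic Loss : a formula which penalizes wrong classifications, and all the more heavily when the model was confident in them

Confusion Matrix : a table of predicted versus actual classes, showing which kinds of errors the model makes (false positives, false negatives…)

Area under Curve : the probability that a randomly chosen positive example will be ranked higher than a randomly chosen negative example

F1 Score : the harmonic mean of precision and recall, balancing false positives against false negatives

Mean Absolute Error : the average of the absolute differences between the Original Values and the Predicted Values

Mean Squared Error : the average of the squared differences, which penalizes large errors more heavily and makes it easier to compute gradients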
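For readers who prefer code to definitions, the metrics listed above can be sketched in a few lines of plain Python. This is a simplified version for binary labels (0 or 1), with invented example values; real projects would typically use a tested library instead, and the function names below are my own.

```python
import math


def accuracy(y_true, y_pred):
    """Correct predictions divided by predictions made."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def confusion_counts(y_true, y_pred):
    """Return (tp, fp, fn, tn) for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return tp, fp, fn, tn


def f1_score(y_true, y_pred):
    """Harmonic mean of precision and recall."""
    tp, fp, fn, _ = confusion_counts(y_true, y_pred)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def log_loss(y_true, y_prob, eps=1e-15):
    """Average penalty; confident mistakes cost the most."""
    total = 0.0
    for t, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1 - eps)  # avoid log(0)
        total -= t * math.log(p) + (1 - t) * math.log(1 - p)
    return total / len(y_true)


def auc(y_true, y_prob):
    """Chance that a random positive is ranked above a random negative."""
    positives = [p for t, p in zip(y_true, y_prob) if t == 1]
    negatives = [p for t, p in zip(y_true, y_prob) if t == 0]
    wins = sum(
        1.0 if pos > neg else 0.5 if pos == neg else 0.0
        for pos in positives
        for neg in negatives
    )
    return wins / (len(positives) * len(negatives))


def mean_absolute_error(y_true, y_pred):
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mean_squared_error(y_true, y_pred):
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical classifier output: true labels and predicted probabilities.
y_true = [1, 0, 1, 1, 0, 0]
y_prob = [0.9, 0.2, 0.6, 0.4, 0.7, 0.1]
y_pred = [1 if p >= 0.5 else 0 for p in y_prob]

print(accuracy(y_true, y_pred), confusion_counts(y_true, y_pred))
print(f1_score(y_true, y_pred), log_loss(y_true, y_prob), auc(y_true, y_prob))
```

The last two functions apply to numerical predictions (a forecast, a price) rather than to classes, which is why they are not called on the classifier example.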

All the above matter insofar as they allow a team to inform a pre-prepared scorecard. External teams are also useful here as they will not be biased by the costs already sunk on the project.

Hint : beware of the “rage-to-conclude”.

8. Deploy the algorithm

The deployment phase of an A.I project is probably the most crucial of all, given the resources invested. Indeed, the transition from prototypes to production systems can be expected to be expensive and time-consuming, but should at least not run into major issues if the risk analysis was done properly (that is sadly rarely the case).

There aren’t many ways to ensure that a deployment goes smoothly beyond an efficient project management team. The two main techniques are as follows :

Silent deployment : not to be confused with a “Silent Install”, Silent deployment means running the algorithm in parallel to the historical solution to ensure that it either matches or improves its findings.

A/B testing : also known as “Split testing” or “Bucket testing”, it entails testing the new solution on only part of the population, while the other group continues on with the historical solution.
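Both techniques can be sketched in a few lines. The code below is an illustration under assumptions, not a deployment recipe: the two review rules, the case amounts, the user identifier and the 10% default are all invented, and `silent_run` and `ab_group` are hypothetical helper names.

```python
import hashlib


def silent_run(cases, legacy, candidate):
    """Run both solutions on every case and return their agreement rate.

    Only the legacy answer would be acted upon; the candidate's answer
    is computed and compared, never served.
    """
    agreements = 0
    for case in cases:
        served = legacy(case)
        shadow = candidate(case)
        agreements += served == shadow
    return agreements / len(cases)


def ab_group(user_id, treatment_percent=10):
    """Deterministically assign a user to the 'new' or 'historical' solution."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100  # stable bucket between 0 and 99
    return "new" if bucket < treatment_percent else "historical"


# Hypothetical rules: flag a transaction for manual review above a threshold.
def legacy_rule(amount):
    return amount > 1000


def candidate_rule(amount):
    return amount > 900


print(silent_run([100, 950, 1200, 5000], legacy_rule, candidate_rule))
print(ab_group("user-42"))
```

Hashing the user identifier, rather than drawing a random number on every visit, keeps each user in the same group for the whole test, which makes the comparison between the two populations meaningful.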

Furthermore, and though I would encourage all first-time projects to move slowly and avoid breaking things, keeping an eye out for scaling potential can often be key to future successes.

Hint : Do not be afraid of redesigning workflows around the new solution.

9. Communicate both successes and failures

Time to “Render to Caesar the things that are Caesar’s”, and share the success of the Machine Learning algorithm built with the world. If the endeavor wasn’t a success, it’s worth discussing too, if only as a learning opportunity for the entire organisation.

There are a few key groups which ought to be made aware of both successes and failures, for a wide variety of reasons :

Investors/shareholders : In an age when buzzwords can make or kill stocks, sharing an A.I success story will please investors, and is likely to increase the value of the company, especially if the project made it possible to develop new, useful capabilities that can have a long-term impact.

Government agencies : This of course depends on the industry and the nature of the tool used, but given the current discussions surrounding ethics and data management, gaining the trust of the public sector through transparency is probably a wise move.

Customers : Customers need to a) be educated as to what the solution can do for them, and b) be used as ambassadors for the improved service or product, hence creating a halo effect.

Candidates : Candidates need to be made aware that a company might be changing, and that their skills may need to adapt to these changes in the future. Many candidates also see working in an A.I-capable company as a positive thing, potentially increasing the applicant pool.

Employees : Most important of all, employees need to know what is being done, how this may impact them, and whether their day-to-day life will change. People are naturally averse to change, and letting rumors of automation go around is the surest way to ruin a good project.

Hint : Under-hyping tends to be more productive than over-hyping.

10. Tracking

I have bad news. And good news.

The bad news is that the work never ends. The good news is that the work never ends.

Indeed, letting an algorithm run unchecked for a long period of time once it’s put into production would be foolish. The world changes, as do people and their habits, leading their data to change too. Within months, it is likely that the initial data used to train an algorithm is no longer relevant.

The challenge here is that it might be very hard to notice at first that the algorithm is no longer representative of the world and needs to be reworked using the expertise gained during the construction of version 1.0 of the algorithm. Talk about a Sisyphean task.
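One modest way to notice such a change is to compare recent data with the data the algorithm was trained on. The sketch below measures how far the recent average has moved, in units of the training standard deviation. The basket values, the function names and the threshold of 2.0 are all assumptions chosen for illustration; real monitoring usually tracks many features and more robust statistics.

```python
import statistics


def mean_shift(training, recent):
    """Shift of the recent mean, measured in training standard deviations."""
    mu = statistics.mean(training)
    sigma = statistics.stdev(training)  # assumes the training values vary
    return abs(statistics.mean(recent) - mu) / sigma


def needs_review(training, recent, threshold=2.0):
    """Flag the model for review when the input data has visibly moved."""
    return mean_shift(training, recent) > threshold


# Hypothetical average basket values seen during training, then in production.
training_baskets = [20, 22, 19, 21, 23, 20, 21, 22]
stable_baskets = [21, 20, 22, 21]
shifted_baskets = [30, 31, 29, 32]

print(needs_review(training_baskets, stable_baskets))
print(needs_review(training_baskets, shifted_baskets))
```

A check like this does not say what changed or why; it only tells the team that version 1.0 may no longer describe the world it is running in.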

Hint : The world changes a lot faster than you think. And an algorithm cannot see that.

CONCLUSION

The very nature of a data-driven project is that it cannot be generic. This means spending both time and money to create something unique to cross a moat created by an early adopter. But because A.I capabilities tend to grow at an exponential rate within large companies, the moat just keeps getting wider. This is the challenge that many companies now face, and why they need a solid process to get started : their margin for error is quickly shrinking.

As such, I have a few final recommendations :

Start small.

Begin with a pilot project.

Set realistic expectations.

Focus on quick wins.

Work incrementally.

Good luck out there.
