Why AI Projects Fail, and How We Can Stop the Madness

- Adrien Book

- Oct 27, 2024

Ready for a meaningless statement? Here goes: AI is everywhere, transforming industries from healthcare to defense. Private-sector AI investment shot up by 1,700% between 2013 and 2022 (probably a lot more since). Military AI budgets aren’t shy either, with the U.S. Department of Defense spending $1.8 billion a year on it. But despite all the hype, it turns out that AI is not the one-stop fix for all the world’s problems that some had been hoping for. In fact, by 2022, over 4 out of 5 AI projects failed, according to research conducted in Germany. That failure rate is twice that of conventional IT projects.

Amidst an abundance of seemingly misguided spending, the RAND Corporation (an American think tank) published “The Root Causes of Failure for Artificial Intelligence Projects and How They Can Succeed: Avoiding the Anti-Patterns of AI.” Written by James Ryseff, Brandon De Bruhl, and Sydne J. Newberry, the report offers a look at why AI projects flop and what needs to change for them to actually deliver on their promises.

Why AI projects are failing

The research is based on interviews with 65 AI practitioners across industry and academia. Participants had at least five years of experience in building machine learning models, and they represented diverse industries and company sizes. By diving deep into the experiences of these practitioners, the paper seeks to understand why AI projects fail and identify actionable lessons. Obviously, this does not yield the most robust study (interviews are anecdotal by definition). But it is enough to distill the key reasons for failure into five categories (none of which are very surprising).

1. Misaligned goals

Organizations often fail to articulate what they actually want to achieve with AI. That leads them to deploy models that either optimize for the wrong metrics or simply don’t fit into business workflows. “What if we do a GenAI?” said every boardroom for the past year, without any further thought.

An example: a company might want to implement a simple LLM to hire more efficiently. HR simply says “we get too many CVs, and it takes us too long to select the right ones”. What the tech team might hear is “we get too many CVs”, so they build an algorithm that uses previously rejected candidates to automatically reject new candidates with the same profile. This reduces the workload… but does nothing to help choose the best candidate for a role (on top of the obvious ethical concerns around bias).

Communication is crucial. More than that: actually understanding one another when we communicate in a corporate setting is crucial. Establishing a shared understanding of project goals (particularly between technical and leadership teams) can make the difference between success and failure.

2. Data challenges

AI models rely on data (duh), and if an organization lacks high-quality data, its models will not succeed. To go back to the HR / recruiting example: if a company wants to pick better candidates but has been recruiting without common benchmarks across recruiters, the CVs that made it through may have very little in common. And that means an algorithm trained on that data may as well be making decisions at random.

Sometimes, the data might even be there, at the right level of quality… but in an unusable state. A bunch of PDFs can be used, but most of the time they have to be formatted in roughly the same way to be useful. And that takes work. And people are lazy. And that leads to bad algorithms.

Ensuring the quality is there before a project launches is key to its success. This should be obvious, yet here we are. I have no advice here other than… think. Hard. Make an effort.

3. Infrastructure gaps

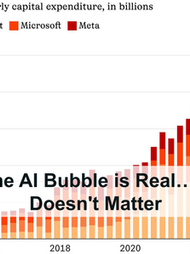

Organizations often don’t invest enough in infrastructure to support data governance and model deployment. By this, I mean that there aren’t enough chips, servers and racks in a cold building somewhere to A) make the math add up for the most advanced models and/or B) run the freshly trained model quickly while using as little energy as possible (what is known as inference). This leads to unworkable products… and failure.

Of course, this is happening less now as hyperscalers (Google, Microsoft, AWS…) and colocation providers (Equinix, Iron Mountain…) expand their “compute as a service” offers, but many companies still fall prey to it.

4. Technical limitations

AI, for all its wonders, has limits. Misapplying it to problems that can’t be solved with today’s technology is a recipe for disaster. For example, you can’t use it to predict the future, only to see what happened in the past and have fun with variables. And yet… a lot of companies seem to want to use it to predict the future. Hilarity often ensues, because predictive power isn’t really predictive if it’s based on just a couple thousand data points…

Leaders need to understand these limits and set realistic expectations to prevent disappointment and wasted resources. More on that below.

5. Shiny new toy syndrome

Even if the goals are aligned, the data exists, the infrastructure has been built, and AI is capable of doing what we want it to… there are still ways to misuse AI.

Focusing on using the latest technology rather than solving a real problem for users often leads to spectacular failures. AI isn’t magic: it’s just another tool. I see it a lot today: companies wanting GenAI when what they actually need is just a Spicy Multiple Regression… Being “problem-centric” is key. Successful AI projects focus on solving real problems, not just on implementing the latest technologies.
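To make the “Spicy Multiple Regression” point concrete: a plain multiple linear regression fits in a few dozen lines of Python, no GPUs required. The sketch below is my own illustration, not something from the RAND report. It fits ordinary least squares by solving the normal equations (XᵀX)b = Xᵀy with Gaussian elimination. The function names and the data are hypothetical, and in a real project you would use a well-tested statistics library rather than rolling your own.

```python
def solve(a, b):
    """Solve the linear system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    # Build the augmented matrix [a | b] without mutating the inputs.
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        # Pick the row with the largest pivot to keep the arithmetic stable.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system: features are collinear")
        m[col], m[pivot] = m[pivot], m[col]
        # Eliminate this column from every row below the pivot.
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution, from the last row upwards.
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def fit_multiple_regression(rows, targets):
    """Hypothetical helper: OLS coefficients [intercept, b1, b2, ...]."""
    # Prepend a constant 1 to each row so the first coefficient is the intercept.
    X = [[1.0] + list(r) for r in rows]
    k = len(X[0])
    # Normal equations: (X^T X) b = X^T y.
    xtx = [[sum(x[i] * x[j] for x in X) for j in range(k)] for i in range(k)]
    xty = [sum(x[i] * y for x, y in zip(X, targets)) for i in range(k)]
    return solve(xtx, xty)


def predict(coefs, row):
    """Apply fitted coefficients to one row of features."""
    return coefs[0] + sum(c * v for c, v in zip(coefs[1:], row))


if __name__ == "__main__":
    # Made-up data generated from y = 2 + 3*x1 - 1*x2, so the fit should recover it.
    rows = [(1, 2), (2, 1), (3, 5), (4, 3), (5, 4)]
    targets = [2 + 3 * x1 - x2 for x1, x2 in rows]
    coefs = fit_multiple_regression(rows, targets)
    print([round(c, 6) for c in coefs])  # approximately [2.0, 3.0, -1.0]
    print(round(predict(coefs, (6, 2)), 6))
```

The point isn’t the code itself: it’s that a transparent, cheap, explainable model like this is often all a business problem needs, and it should be ruled out before anyone says “GenAI”.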

These findings illustrate that a combination of unrealistic expectations, a lack of preparation, and a poor understanding of AI’s capabilities are the main ingredients in the stew of failure.

How to prevent AI project failures

To remedy these issues, organizations should check off five elements from whatever checklist they’re using to launch AI projects.

Ensure clear communication between teams

Miscommunication is the leading cause of failure (in relationships too, FYI). Businesses should set clear, realistic goals and ensure technical teams understand these goals in context. Set regular check-ins where both sides can align on progress. According to a study by McKinsey, AI projects that foster regular communication between stakeholders and technical teams are 2.5 times more likely to succeed.

Commit to long-term solutions

AI projects need time. Leaders should ensure that any AI project is deemed worthy of at least a year-long commitment. No commitment? No project. Gartner reports that successful AI projects typically require 12 to 18 months to show tangible results. Quick wins are rare, and sustained commitment is necessary for AI to yield value.

Focus on business problems

Leaders need to move away from seeing AI as a magic bullet. They should focus on business issues and solve them with the best available technology. Sometimes that’s AI, sometimes it’s not. Companies that focus on solving specific business problems with AI, rather than chasing technology trends, will likely see a better return on investment.

Invest in data infrastructure

Organizations should spend money on data governance, pipelines, and model deployment. AI isn’t plug-and-play: it needs a foundation to succeed. That’s why Nvidia partnered with Accenture, for example. Proper data governance ensures that models are trained on reliable, high-quality data, leading to better outcomes.

Know AI’s limits

Businesses need to be aware of what AI can and can’t do. Engaging technical experts early in the project can prevent embarrassing failures. In fact, I’m fairly sure most failed AI projects are due to unrealistic expectations from leadership, driven by misconceptions about AI’s current capabilities. Involving subject matter experts can provide a reality check and set feasible targets. An adult in the room who isn’t fully AI-pilled often helps, too. I’m available.

The (many) flaws in the study

While the paper does a great job of summarizing key reasons for AI project failures, it leaves a few gaps. Firstly, the perspectives gathered come primarily from non-managerial engineers. This introduces the risk of skewed findings, placing more blame on leadership failures and not fully considering technical missteps by the engineers themselves.

Additionally, the sample size is relatively small. With 65 participants, the study may not fully capture the breadth of experiences necessary to generalize to the entire AI industry. Future research could expand the dataset, include more leadership perspectives, and incorporate longitudinal studies to see how projects evolve over time.

Finally, and most importantly, none of the authors are professors or academics. Hell, Sydne has a medical background. So everything written needs to be taken with a grain of salt. I was halfway through the paper when I realized I’d rather be reading the McKinsey 2024 AI report. Check that instead.

AI may deliver on its many promises… But only if we get real

The future of AI doesn’t have to be a graveyard of expensive failed projects. With clearer goals, more thoughtful investment, and a grounded understanding of AI’s true abilities, these projects can begin to deliver. We need fewer projects motivated by FOMO and more motivated by actual need. If leaders listen to their technical teams, align on realistic goals, and invest in the necessary infrastructure, AI can finally go from a disappointing buzzword to an impactful solution.

And who knows? Maybe, just maybe, those billions of dollars won’t be spent on moon-shots that crash and burn.

Good luck out there.
