Simple Answers to Artificial Intelligence’s FAQs

- Adrien Book

- Nov 15, 2018

- 7 min read

Updated: Dec 31, 2022

What is Artificial Intelligence? What are some examples of Artificial Intelligence? Should we trust it? What are the dangers of Artificial Intelligence? What's next? Those are all important questions, which we answer below.

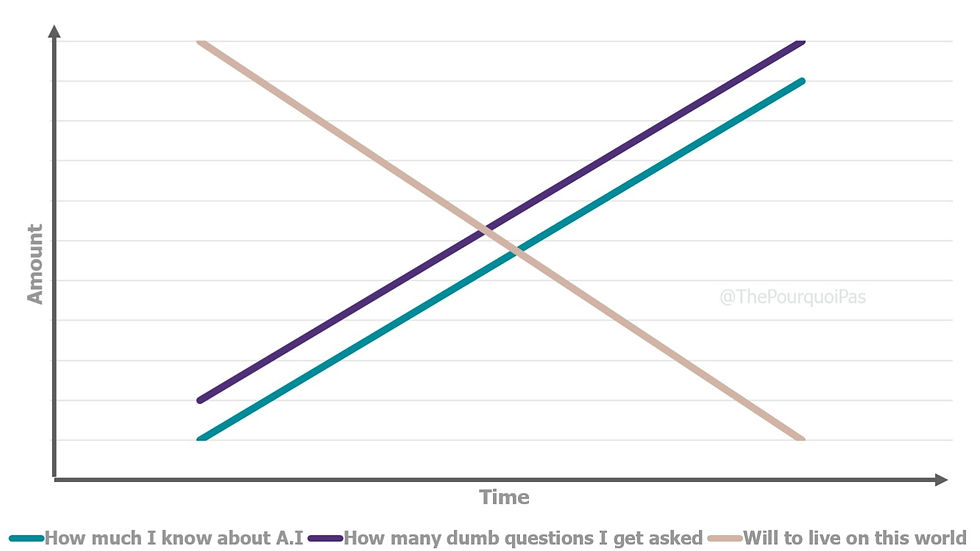

Regularly receiving queries about Artificial Intelligence is of course flattering, but it can also be bothersome, as some of the most frequently asked questions could easily be answered via a quick Google search. A number of people are however wary of doing so, partly because of the unrealistic image of A.I painted by movies (among other unrealistic expectations), and partly because of the current startup culture, which venerates coders and techies and puts them and their knowledge on a pedestal.

With that in mind, below is a simple, quick and no-nonsense look at A.I through the lens of the questions I most frequently get asked.

“What is your own definition of Artificial Intelligence?”

A.I is not a mere constellation of processes and technologies, but also a business topic, an academic matter and an ethics concern (among other things). I’d argue that, to keep things very easy, you could define A.I in three ways:

Symbolic A.I: a simple algorithm which is able to make decisions based on predefined parameters and expected actions. These are mere “if-statements”, and far from what academics would call artificial intelligence. Nevertheless, it is, in a way, intelligence: if I see a snake, I run. If I see cake, I salivate. The main difference is that I know why I’m running (experience, fear…), and why I’m salivating (hunger, potential sugar-high which would hit the reward center of the brain…). A symbolic A.I has no idea of the why and how; it’s just automated to follow procedure.

Machine Learning: this refers to an algorithm which also follows procedure, but on a deeper level. When “fed” enough data (and we’re talking very, very large amounts), it can potentially draw inferences which a human might not be able to draw in their lifetime (a.k.a unsupervised machine learning, a.k.a clustering). Beyond this, we’re able to create tools which learn and adapt from this data through rewards (a.k.a reinforcement learning), and even tools able to identify and categorise unstructured data such as images or speech (a.k.a deep learning). Though incredible, these technological advances only apply to very specific, easily automated tasks. For now, that is.

General Artificial Intelligence: this is what you see in movies (I, Robot, Ex Machina, 2001: A Space Odyssey…). It’s an algorithm which could not only learn from experience but could also transfer that knowledge from one very specific task to another (you can read this on a computer and could potentially read my handwriting, but at the moment an A.I could only do one of the two). Alternatively, you could look at it this way: a modern A.I could make a very accurate prediction based on data, but would need a (comparatively statistically challenged) human to infer meaning (the good old causation vs correlation debate). A general artificial intelligence could do both, but is oh so far away from ever being developed.
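To make the difference between the first two definitions a bit more concrete, here is a minimal sketch in Python. Everything in it (the `symbolic_agent` and `two_means` names, the toy numbers) is made up for illustration: the first function is the “if I see a snake, I run” kind of rule, the second is a bare-bones version of clustering that splits numbers into two groups without ever being told what the groups are. Real machine learning works on far more data and far more dimensions.

```python
from statistics import mean


def symbolic_agent(observation: str) -> str:
    """Hand-written rules: the program follows procedure and nothing else."""
    rules = {"snake": "run", "cake": "salivate"}
    return rules.get(observation, "do nothing")


def two_means(values, iterations=10):
    """Toy 1-D clustering (k-means with two groups).

    Starts with the smallest and largest values as group centres, then
    repeatedly assigns each value to its nearest centre and moves each
    centre to the average of its group.
    """
    low, high = min(values), max(values)
    low_group, high_group = list(values), []
    for _ in range(iterations):
        low_group = [v for v in values if abs(v - low) <= abs(v - high)]
        high_group = [v for v in values if abs(v - low) > abs(v - high)]
        if not high_group:  # all values identical: nothing to split
            break
        low, high = mean(low_group), mean(high_group)
    return low_group, high_group


print(symbolic_agent("snake"))
print(two_means([1, 2, 3, 10, 11, 12]))
```

Nobody told `two_means` where to draw the line between “small” and “large”; it found the split from the data. Nobody had to tell `symbolic_agent` anything either, but only because a human wrote every rule in advance.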

“Do we already use it in our daily lives?”

I mean, yes, of course. We shop on Amazon, we take Ubers, we use Google, we send Gmails, we fly in planes… we all use A.I every day, and it would be very hard to find someone not using such algorithms in their daily lives. Search results, newsfeeds, digital advertisement, platform moderation, friends/products recommendations… All of it is shaped by companies leveraging data to inform software.

Individuals, however, are unlikely to build such a tool themselves, due to the massive amount of data necessary to create a convincing A.I.

“When will we get the happy-fun-times robots?”

That’s a robotics question. Not an A.I question. Get your head out of the gutter.

“Should we trust A.I?”

A.I is not something to be trusted or not trusted. It is merely a man-made tool which is “fed” data in order to automate certain tasks, at scale.

Do you trust your washing-machine?

Your calculator?

Yeah, me neither.

Math is black magic.

The only things we should be wary of (for now) are the quality of the software’s design and the quality of the data “fed” to it. Ensuring the quality of both is however easier said than done. As such, this question should be rephrased as “do we trust (insert company’s name here)’s managers to have our best interest at heart?” and, if yes, “do we trust the company’s programmers to implement that vision flawlessly while taking into account potential data flaws?”.

That’s trickier, isn’t it? But more realistic.

“More generally, should I be frightened of this new technology?”

First of all, forget the LONG think-pieces about General Artificial Intelligence (go home Kissinger, you’re drunk). We’re not there yet and the chances we ever will be are incredibly small (I’m a realist, sue me). Nevertheless, though the technology itself is more or less value-neutral, as explained above, the way it’s implemented may have terrifying ramifications.

Right to self-determination: Through a basic understanding of Nudge Theory, a potentially hostile actor could manipulate content displays and personalisation on platforms enough to influence a statistically significant number of people towards a pre-determined nefarious goal (hey, remember when Russia used social media to get Trump elected?).

Right to freedom of opinion: Put simply, A.I could (and can) identify and target specific opinions on the internet. For advertising purposes? Yes, for advertising purposes, said Erdogan and Putin. This could become a nightmare if the mechanisms of this identification and targeting are not well understood. Hey, remember when Facebook labeled 65,000 Russian users as ‘interested in treason’?

Right to privacy: yeah, no, we can forget about that. Oh, the Americans already have? Cool, cool. Hey, remember when facial recognition software could tell your sexual orientation? In Russia? Speaking of…

Obligation of non-discrimination: Hey, remember when facial recognition software could tell your sexual orientation? In Russia? Oh I already… Soz, seemed important.

Of course, all the above does not take into account the whole automation issue. See more below.

“Is A.I an opportunity for businesses or a threat to the traditional channels and employees?”

Why can’t it be both? This technology would not be under development if there were no benefit to reap from it: it is an opportunity for corporate teams to increase margins and use their customers’ data to better serve them (how we go from there depends on your view of capitalism as a system). However, this absolutely means that many people WILL lose their jobs in the process.

“But who, who will lose their jobs?” I hear you chant.

Well, it’s complicated. Many thousands of people have already lost their jobs, or were simply not replaced when they left, as technological change does not arrive in one sudden surge but in waves. In a recent article for Harvard Business Review, Andrew Ng, one of the most influential AI researchers, offers this rule of thumb: “if a typical person can do a mental task with less than one second of thought, we can probably automate it using AI either now or in the near future.”

I however believe that in the long run A.I will assist us more than replace us, and ultimately free more time for grander endeavors: washing-machines and Hoovers didn’t replace women, they made it so that they could enter the workforce, ushering in nearly 30 years of economic growth.

“Should my business follow or lead?”

I’ve got good news and I’ve got bad news.

The good news is that the decision has already been made: there are already A.I leaders (Google, Uber, Facebook, Amazon, JD, Alibaba…), and most brands are now just trying to follow. The bad news is that the decision has already been made: there are already A.I leaders and you’re now just playing catch-up. And sadly, in this instance, the world belongs to early adopters.

In many ways, A.I is a zero-sum game: the first to adopt a sustainable A.I strategy is able to create a moat deep enough to protect itself from future competitors.

That however doesn’t mean that an A.I strategy shouldn’t be implemented. On the contrary…

“I want to use machine learning for my business: how do I start?”

First you’d need to assess what data you have, how much of it you have, and what tasks need to be done: machine learning works best with very large data-sets and highly specialised tasks. Then you’d need to hire data scientists (and a consulting firm, hello there) in order to put new processes in place. Then, as a non-technical manager, your role is to know the answer to three questions about your new algorithm:

“What data does it use? What is it good at? What should it never do?”

That’s it. The rest is for subordinates to take care of.

Then comes the boring part: you probably should be thinking about how to manage the ethical, legal, and business risks involved if something goes wrong. But there simply isn’t an industry standard framework for thinking about these kinds of issues. Nevertheless, one very common step involves checks and balances within the data scientist teams. Others are more thorough:

Document the A.I model’s intended use, data requirements, specific feature requirements, and where personal data is used and how it’s protected.

Take steps to understand and minimize unwanted biases

Continuous monitoring by external actors such as consultants (hi!)

Knowing how and when to pull the plug and what that would mean for the business
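For the data scientists in the room, the checklist above can be sketched as a simple record that gets filled in for each model. This is only an illustration under my own assumptions: `ModelRecord`, its field names and `open_items` are hypothetical, not an industry standard (as noted, there isn’t one).

```python
from dataclasses import dataclass, field


@dataclass
class ModelRecord:
    """Hypothetical record covering the four checklist items above."""
    intended_use: str
    data_sources: list[str]
    uses_personal_data: bool
    personal_data_protection: str = ""
    known_biases: list[str] = field(default_factory=list)
    external_reviewer: str = ""
    shutdown_procedure: str = ""

    def open_items(self) -> list[str]:
        """Return the checklist items that have not been documented yet."""
        gaps = []
        if self.uses_personal_data and not self.personal_data_protection:
            gaps.append("personal data protection")
        if not self.known_biases:
            gaps.append("bias review")
        if not self.external_reviewer:
            gaps.append("external monitoring")
        if not self.shutdown_procedure:
            gaps.append("shutdown procedure")
        return gaps


# Example with made-up values: a freshly created record has every gap open.
record = ModelRecord(
    intended_use="churn prediction",
    data_sources=["crm_export"],
    uses_personal_data=True,
)
print(record.open_items())
```

The point is not the code itself but the habit: if a manager can ask “what’s still open on this list?” and get an answer, the boring part is at least being done.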

“What’s next for the technology?”

Are we on the path to Artificial General Intelligence? No, not even a little bit. Machine learning, in fact, is a rather dull affair. The technology has been around since the 1990s, and the academic premises for it since the 1970s. What’s new, however, is the advancement and combination of big data, storage power and computing power.

In fact, A.I breakthroughs have become sparse, and seem to require ever-larger amounts of capital, data and computing power. The latest progress in A.I has been less science than engineering, even tinkering; indeed, correlation and association can only go so far, compared to organic causal learning, highlighting a potential need for the field to start over. Researchers have largely abandoned forward-thinking research and are instead concentrating on the practical applications of what is known so far, which could advance humanity in major ways, though it would provide few leaps for A.I science.
