AI's energy consumption is a growing barrier to sustainable tech progress

- Adrien Book

- Dec 8, 2023

Updated: Dec 22, 2023

In the rapidly evolving AI landscape, the focus has long been on technological advancements and breakthroughs (e/acc, anyone?). We want AIs that think like humans, and we want them now, damn it. However, this relentless pursuit of innovation often overlooks a critical aspect: the environmental impact.

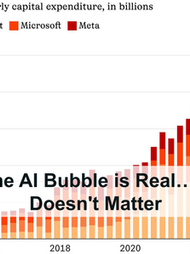

As generative AI tools like ChatGPT become increasingly integral to our daily lives, their energy consumption and carbon footprint have emerged as pressing issues. Between 2017 and 2021, the electricity used by major cloud compute providers like Meta, Amazon, Microsoft, and Google more than doubled, contributing significantly to greenhouse gas emissions.

This sets the stage for a new study on the matter, titled “Power Hungry Processing: Watts Driving the Cost of AI Deployment?” (great title), written by Sasha Luccioni and Yacine Jernite (both Hugging Face employees) and Emma Strubell (of Carnegie Mellon University and the Allen Institute for AI).

The paper offers an in-depth analysis of the energy consumption and carbon emissions associated with AI, specifically during the model inference phase (i.e., deployment and public use). The authors compared the environmental costs of task-specific models (fine-tuned for a single task) and multi-purpose models (trained for multiple tasks). Their methodology included testing 88 models across ten different tasks using various datasets, and measuring the energy required and carbon emitted for 1,000 inferences.
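To make the "energy required and carbon emitted for 1,000 inferences" idea concrete, here is a minimal sketch of the underlying arithmetic: emissions are roughly the energy used multiplied by the carbon intensity of the electricity grid. This is not the authors' measurement code. The function names and the numbers below are hypothetical placeholders chosen for illustration, not figures from the paper.

```python
def emissions_g_co2e(energy_kwh: float, grid_intensity_g_per_kwh: float) -> float:
    """Estimate emissions (grams CO2-equivalent) from energy use.

    Assumes a simple model: emissions = energy * grid carbon intensity.
    """
    if energy_kwh < 0 or grid_intensity_g_per_kwh < 0:
        raise ValueError("inputs must be non-negative")
    return energy_kwh * grid_intensity_g_per_kwh


def per_inference(total: float, n_inferences: int = 1000) -> float:
    """Spread a total measured over a batch evenly across its inferences."""
    if n_inferences <= 0:
        raise ValueError("n_inferences must be positive")
    return total / n_inferences


# Hypothetical placeholder values, NOT taken from the study.
energy_per_1000_kwh = 0.5   # energy measured for 1,000 inferences
grid_intensity = 400.0      # grams CO2e emitted per kWh of electricity

total_g = emissions_g_co2e(energy_per_1000_kwh, grid_intensity)
print(f"Emissions for 1,000 inferences: {total_g:.1f} g CO2e")
print(f"Emissions per inference: {per_inference(total_g):.3f} g CO2e")
```

The same model running on a cleaner grid (a lower intensity value) would emit less for the same energy, which is why data center location comes up later when discussing certifications.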

In short, the study finds that multi-purpose generative architectures like ChatGPT and Midjourney are significantly more energy-intensive than task-specific systems across various tasks. This discrepancy raises concerns about the growing trend of deploying versatile, yet resource-heavy, AI systems without fully considering their environmental impact. More below.

Key takeaways from the study

Multi-purpose generative AI models like ChatGPT and Midjourney, while versatile, are significantly more energy- and carbon-intensive than task-specific models.

Image-related AI tasks consume more energy than text-based tasks (which seems obvious in hindsight).

There is an uneven trade-off between the benefits of multi-purpose AI systems and their environmental costs.

The study’s authors urge a reassessment of deploying multi-purpose models for tasks where smaller, task-specific models could suffice.

What do we do with that information?

Companies, governments, and consumers all have a clear path ahead. They just need to walk it.

For companies

Optimize AI’s “4Ms”: Researchers from Google and UC Berkeley have highlighted the “4Ms” of machine learning: Model, Machine, Mechanization, and Map. Optimizing these factors can significantly reduce the carbon emissions and energy use of ML systems.

For governments

Develop better policies and regulations: Current AI regulations often overlook environmental sustainability. However, the EU AI Act now classifies environmental harm as a high-risk area, requiring transparency and disclosure measures from AI model providers. As I’ve often argued, the rest of the world should follow suit. The compliance of data centers, in particular, should be carefully assessed. Meanwhile, governments should encourage research and development of more energy-efficient AI architectures.

Support standardized reporting and certifications: There is currently no process for certifying AI model sustainability. However, a rating system similar to Leadership in Energy and Environmental Design (LEED) in construction could be developed for AI models, considering factors like model architecture and data center location.

For consumers

Prioritize task-specific AI models: For well-defined tasks, a specialized model uses less energy. For example, if you need a language translation tool, choose one that specializes in translation rather than a multi-purpose model. If you can recycle, you can do this too.

Advocate for change: Consider joining or supporting organizations and initiatives that advocate for responsible AI usage and environmental sustainability. Your voice can contribute to collective efforts in holding companies and governments accountable.

Too soon to draw conclusions

While enriching, the paper does have a few limitations. Primarily, it focuses on a limited set of AI models and tasks, which might not represent the entire spectrum of AI deployments. Additionally, there is a need for more comprehensive data on the energy consumption of different AI models throughout their lifecycle, including both the training and deployment phases. As researchers often like to say… “more research is needed”.

The study by Luccioni et al. serves as a crucial wake-up call to the AI community, highlighting the need to balance technological advancements with environmental sustainability.

It underscores the importance of conscious decision-making in AI deployments, keeping in mind their long-term ecological implications. As we continue to harness the power of AI, it’s imperative to do so with a keen awareness of our environmental responsibilities, fostering a future where innovation and sustainability coexist harmoniously.

Good luck out there.
